Abstract

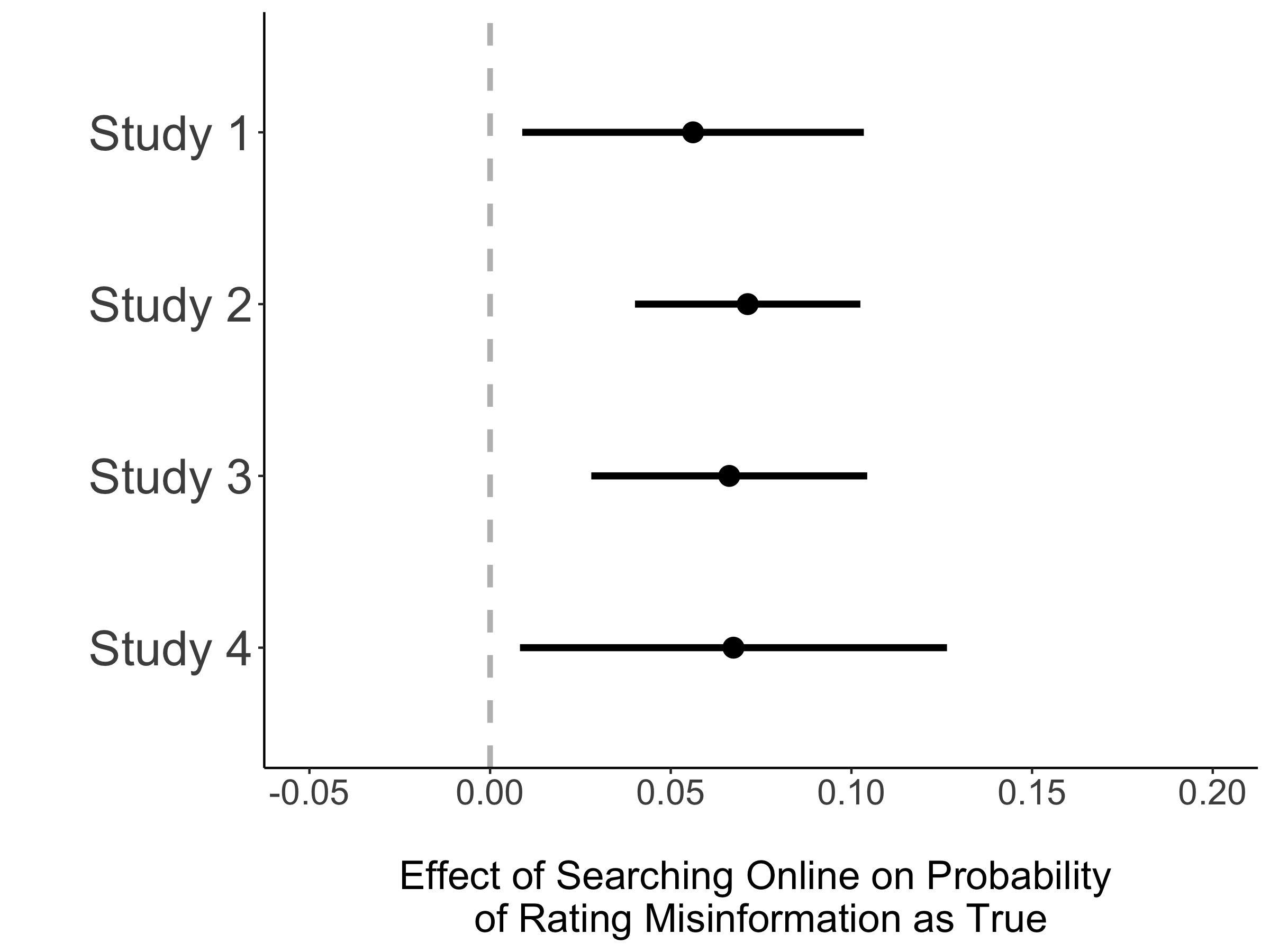

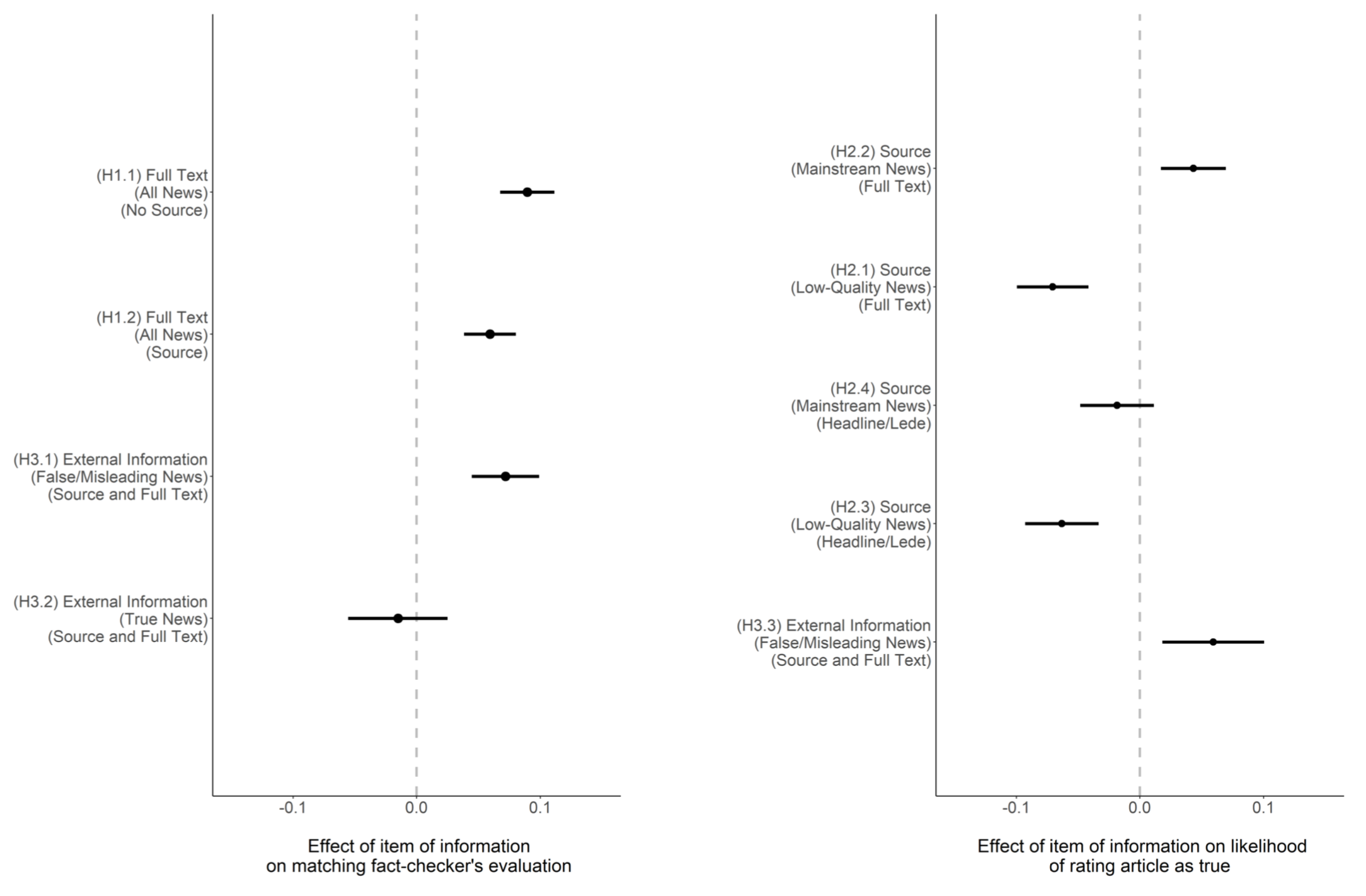

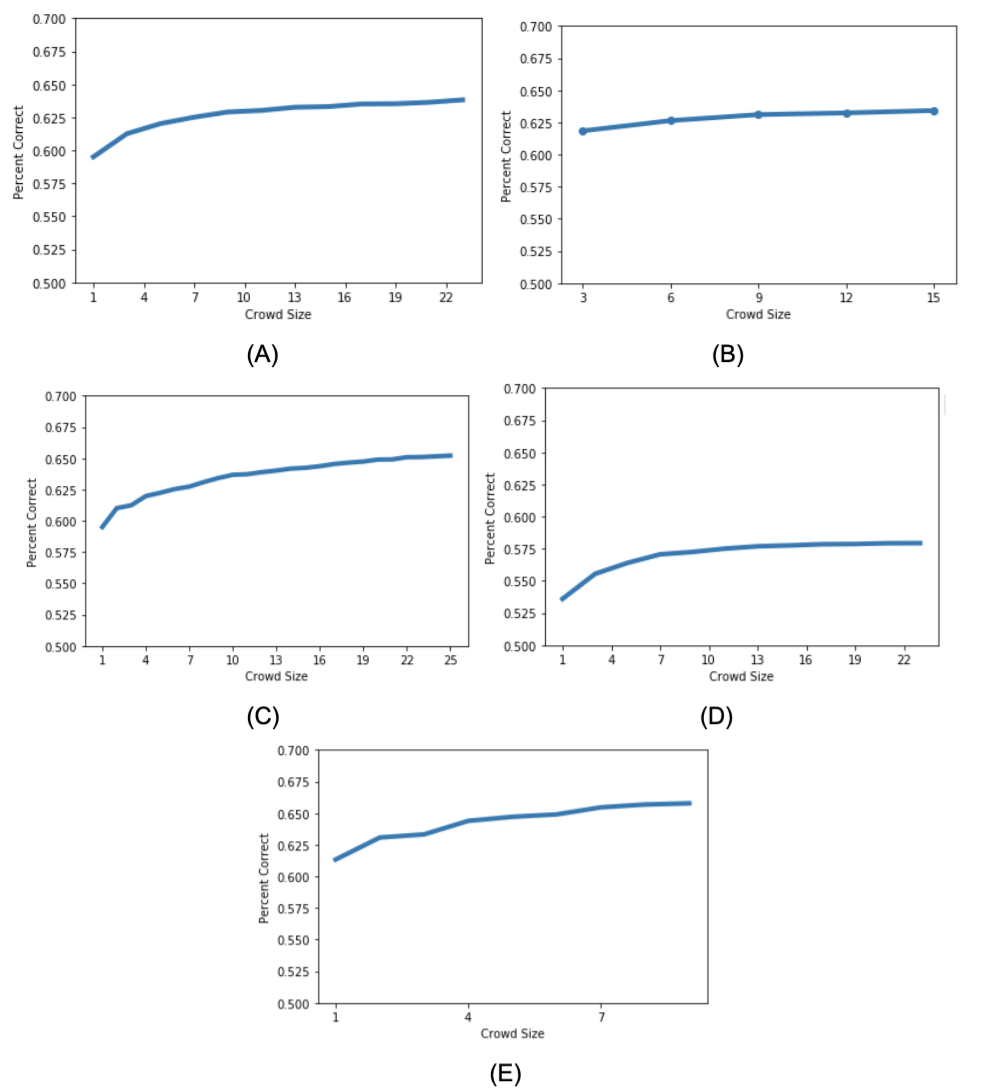

Despite broad adoption of digital media literacy interventions that give online users more information when they consume news, relatively little is known about how this additional information affects the discernment of news veracity in real time. A comprehensive understanding of how information affects discernment of news veracity has been hindered by challenges of external and ecological validity. Using a series of pre-registered experiments, we measure this effect in real time. We find that access to the full article, rather than only the headline and lede, and access to source information both improve an individual's ability to correctly discern the veracity of news. We also find that encouraging individuals to search online increases belief in both false/misleading and true news. Taken together, these experiments provide a generalizable method for measuring the effect of information on news discernment, as well as crucial evidence for practitioners developing strategies to improve the public's digital media literacy.

Abstract

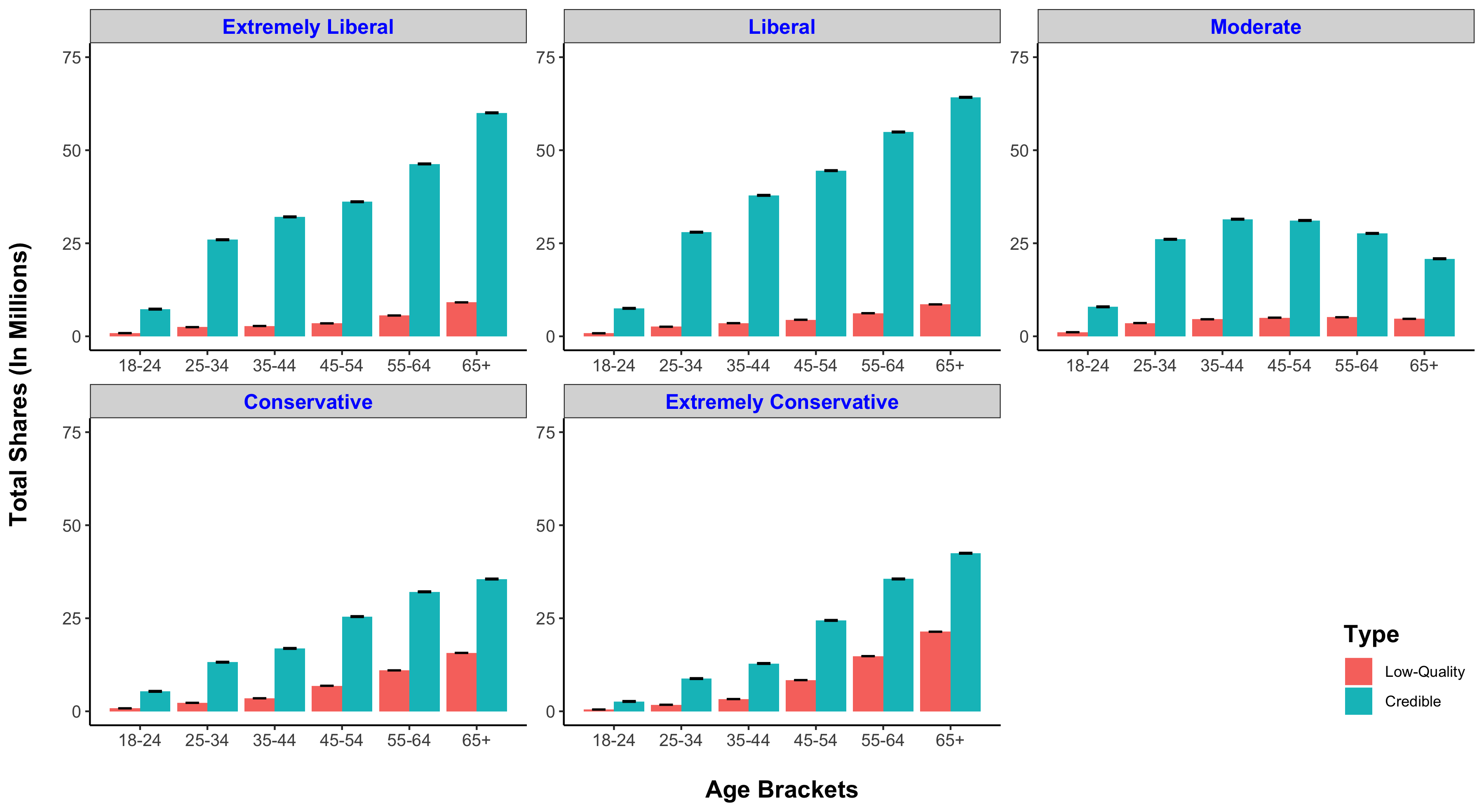

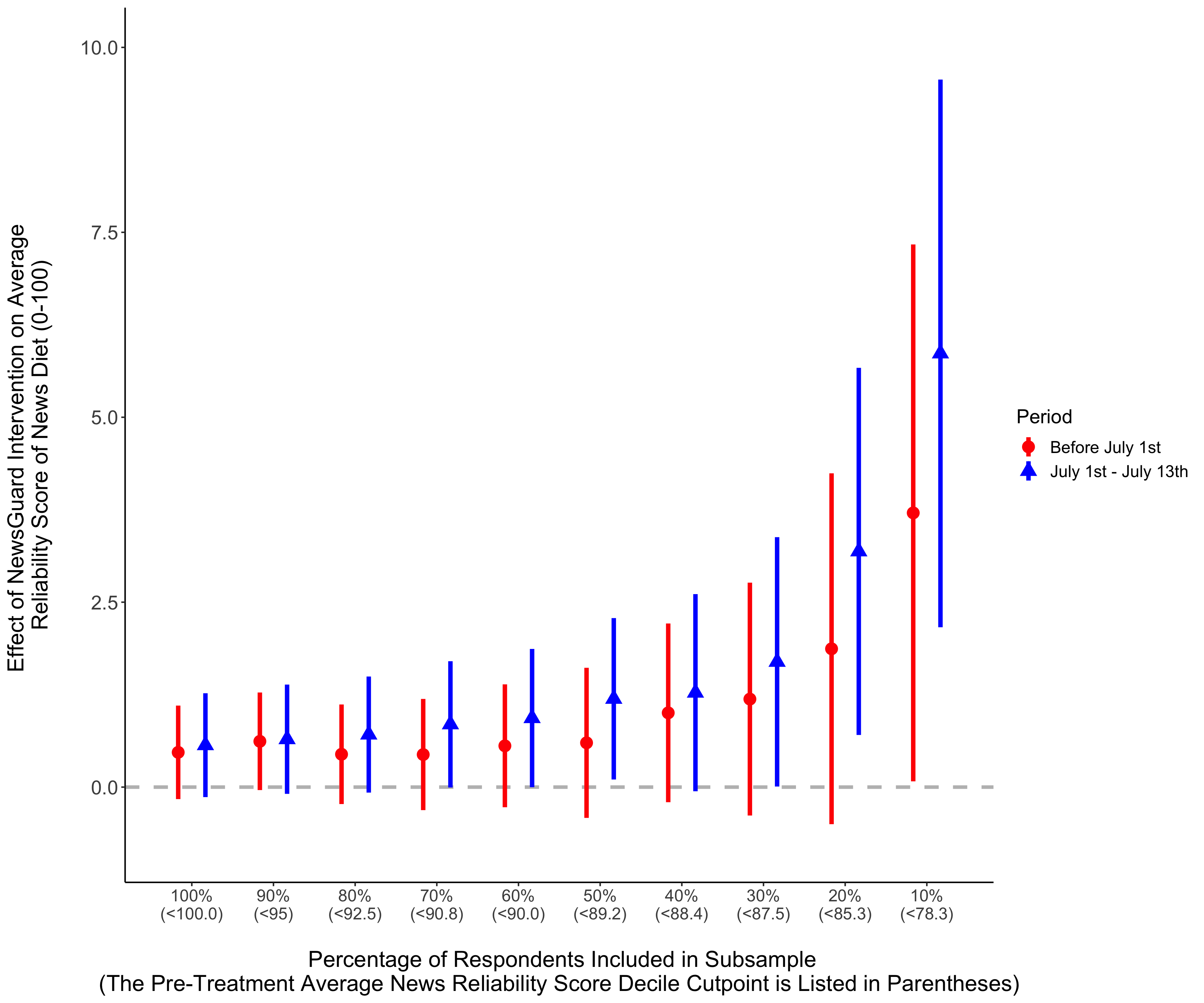

As the primary arena for viral misinformation shifts toward transnational threats such as the Covid-19 pandemic, the search continues for scalable, lasting countermeasures compatible with principles of transparency and free expression. To advance scientific understanding and inform future interventions, we conducted a randomized field experiment evaluating the impact of source credibility labels embedded in users' social feeds and search results pages. By combining representative surveys and digital trace data from a subset of respondents, we provide a rare ecologically valid test of such an intervention on both attitudes and behavior. On average across the sample, we are unable to detect changes in real-world consumption of news from low-quality sources after three weeks. However, we present suggestive evidence of a substantively meaningful increase in news diet quality among the heaviest consumers of misinformation in our sample.

Abstract

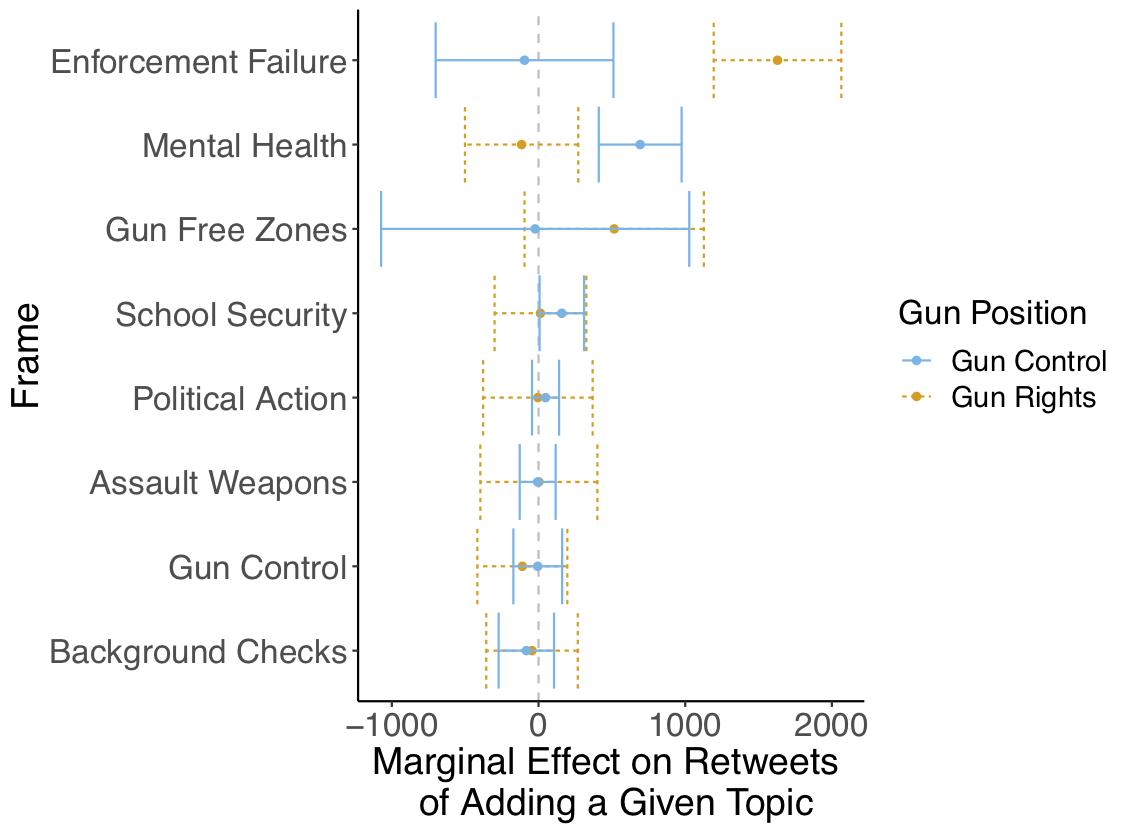

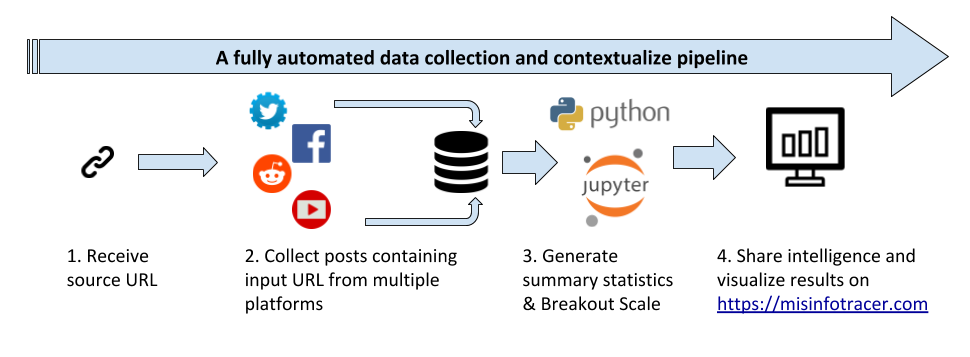

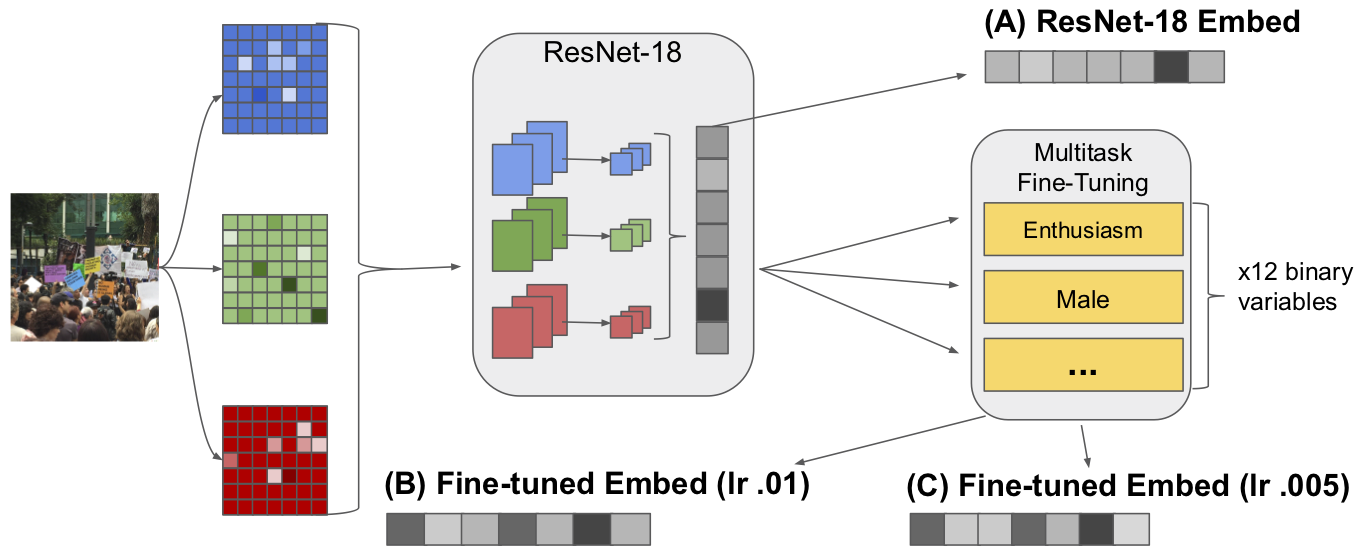

Identifying and tracking the proliferation of misinformation, or fake news, poses unique challenges to academic researchers and online social networking platforms. Fake news increasingly traverses multiple platforms, posted on one platform and then re-shared on another, making it difficult to manually track the spread of individual messages. Moreover, the prevalence of fake news cannot be measured by a single indicator; it requires an ensemble of metrics that quantify information spread along multiple dimensions. To address these issues, we propose a framework called Information Tracer that can (1) track the spread of news URLs over multiple platforms, (2) generate customizable metrics, and (3) enable investigators to compare, calibrate, and identify possible fake news stories. We implement a system that tracks URLs over Twitter, Facebook, and Reddit, and operationalize three impact indicators (Total Interaction, Breakout Scale, and Coefficient of Traffic Manipulation) to quantify news spread patterns. We also demonstrate how our system can discover URLs whose spread patterns deviate from the norm and how it can be used to coordinate human fact-checking of news domains. Our framework provides a readily usable solution for researchers to trace information across multiple platforms, to experiment with new indicators, and to discover low-quality news URLs in near real time.
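
To make the idea of a cross-platform indicator concrete, the following is a minimal sketch and not the Information Tracer implementation. It assumes, hypothetically, that each record is a `(url, platform, interaction_count)` tuple and that Total Interaction can be approximated as the sum of interaction counts for a URL across platforms; the abstract does not give the exact definition. Breakout Scale and Coefficient of Traffic Manipulation are not sketched here because their definitions are not stated. The outlier rule (a modified z-score based on the median absolute deviation) is a generic stand-in for "deviating from the norm", not the authors' method, and the names `total_interaction` and `flag_outliers` are illustrative.

```python
from collections import defaultdict
from statistics import median


def total_interaction(records):
    """Sum interaction counts per URL across all platforms.

    `records` is an iterable of (url, platform, interaction_count) tuples.
    This summation is an assumed approximation of "Total Interaction".
    """
    totals = defaultdict(int)
    for url, _platform, count in records:
        totals[url] += count
    return dict(totals)


def flag_outliers(totals, threshold=3.5):
    """Return URLs whose total is unusually high relative to the others.

    Uses the modified z-score, 0.6745 * (x - median) / MAD, which is a
    generic robust outlier rule chosen for illustration only.
    """
    values = list(totals.values())
    med = median(values)
    mad = median([abs(v - med) for v in values])
    if mad == 0:
        # All deviations collapse to zero; no meaningful spread to compare.
        return []
    return sorted(
        url for url, v in totals.items()
        if 0.6745 * (v - med) / mad > threshold
    )


if __name__ == "__main__":
    # Hypothetical sample data; URLs and counts are made up for illustration.
    sample = [
        ("https://example.org/a", "twitter", 10),
        ("https://example.org/a", "reddit", 5),
        ("https://example.org/b", "facebook", 12),
        ("https://example.org/c", "twitter", 14),
        ("https://example.org/d", "reddit", 11),
        ("https://example.org/e", "twitter", 500),
        ("https://example.org/e", "facebook", 300),
        ("https://example.org/e", "reddit", 200),
    ]
    totals = total_interaction(sample)
    print(totals)
    print(flag_outliers(totals))
```

In a workflow like the one the abstract describes, flagged URLs would be candidates for human fact-checking rather than automatic verdicts, and the threshold would need calibration against real data.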